Admin Participation Marks

To receive the full 5 marks allocated for participation, meet criteria A, B, and C.

A Earned more than half of weekly participation points in at least 10 weeks.

- Weekly quiz(es), if any:

- Quizzes open around the lecture time and stay open until the next lecture starts. In some weeks, there will be two quizzes (because two smaller quizzes are easier for you to manage than one big quiz).

- When awarding participation points for quizzes, we look for two conditions:

- Condition 1: submitted early, i.e., within four days of the lecture (lecture day + three more days) (reason: to encourage learning the weekly topics before doing the weekly tasks)

- Condition 2: answered correctly, i.e., at least 70% of the answers are correct (reason: to discourage random answers)

- You earn:

- 3 points if you satisfy both conditions.

- 2 points if only one of the conditions is satisfied.

- 1 point if submitted but neither condition is satisfied.

- TEAMMATES peer evaluation sessions: 2 points per session

- Other weekly activities:

- There could be other activities related to the lecture, tutorial, or the administration of the module.

- Refer to the activity description for evaluation criteria.

- Each activity earns 2 points unless specified otherwise.
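The quiz scoring rule under criterion A can be sketched as a small function. This is only an illustration: the name `quiz_points`, its parameters, and the choice to award 0 points for a quiz that was not submitted are assumptions, not a description of the actual grading scripts.

```python
from typing import Optional


def quiz_points(days_after_lecture: Optional[int], fraction_correct: float) -> int:
    """Participation points for one quiz (hypothetical helper).

    days_after_lecture: 0 means the lecture day itself; None means not submitted.
    fraction_correct: share of correct answers, between 0.0 and 1.0.
    """
    if days_after_lecture is None:
        return 0  # assumption: a quiz that was never submitted earns nothing
    early = days_after_lecture <= 3      # Condition 1: lecture day + three more days
    correct = fraction_correct >= 0.7    # Condition 2: at least 70% correct
    # 1 point for submitting, plus 1 for each condition that is satisfied
    return 1 + int(early) + int(correct)
```

For instance, a quiz submitted two days after the lecture with 80% correct answers would earn 3 points under this sketch, while one submitted on day five with the same answers would earn 2.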

B Received good peer evaluations

- -1 for each professional conduct criterion in which you score below average (based on the average of ratings received).

Admin Peer Evaluations → Criteria (Conduct)

- Evaluated based on the following criteria, on a scale of Poor/Below Average/Average/Good/Excellent:

Peer Evaluation Criteria: Professional Conduct

- Professional Communication :

- Communicates sufficiently and professionally. e.g. Does not use offensive language or excessive slang in project communications.

- Responds to communication from team members in a timely manner (e.g. within 24 hours).

- Punctuality: Does not cause others to waste time or slow down project progress by frequent tardiness.

- Dependability: Promises what can be done, and delivers what was promised.

- Effort: Puts in sufficient effort, and tries their best, to keep up with the module/project pace. Seeks help from others when necessary.

- Quality: Does not deliver work products that seem to be below the student's competence level i.e. tries their best to make the work product as high quality as possible within their competency level.

- Meticulousness:

- Rarely overlooks submission requirements.

- Rarely misses compulsory module activities such as pre-module survey.

- Teamwork: How willing are you to act as part of a team, contribute to team-level tasks, adhere to team decisions, etc. Honors all collectively agreed-upon commitments e.g., weekly project meetings.

- No penalty for scoring low on competency criteria.

Admin Peer Evaluations → Criteria (Competency)

- Considered only for bonus marks, A+ grades, and tutor recruitment

- Evaluated based on the following criteria, on a scale of Poor/Below Average/Average/Good/Excellent:

Peer Evaluation Criteria: Competency

- Technical Competency: Able to gain competency in all the required tools and techniques.

- Mentoring skills: Helps others when possible. Able to mentor others well.

- Communication skills: Able to communicate (written and spoken) well. Takes initiative in discussions.

C Tutorial attendance/participation not too low

Low attendance/participation can affect participation marks directly (i.e., if you attended fewer than 7 tutorials) or indirectly (i.e., it might result in low peer evaluation ratings).

+ Bonus Marks

In addition, you can receive bonus marks in the following ways. Bonus marks can be used to top up your participation marks but only if your marks from the above fall below 5.

- [For lecture participation] Participated in lecture activities (e.g., in lecture polls/quizzes) in at least 10 lectures: 1 mark

- [For perfect peer ratings] Received good ratings for all 10 peer evaluation criteria: 1 mark

- [For helping classmates] Was very helpful to classmates e.g., multiple helpful posts in forum: 1 mark

Examples:

- Alicia earned 1/2, 3/5, 2/5, 5/5, 5/5, 5/5, 5/5, 5/5, 5/5, 5/5, 4/5, 5/5 in the first 12 weeks. As she received at least half of the points in 11 of the weeks, she gets 5 participation marks. Bonus marks are not applicable as she has full marks already.

- Benjamin managed to get at least half of the participation points in 9 weeks only, which gives him 5-1 = 4 participation marks. But he participated in 10 lectures, and hence gets a bonus mark to make it 5/5.

- Chun Ming met the participation points bar in 8 weeks only, giving him 5-2 = 3 marks. He lost 2 more marks because he received multiple negative ratings for two criteria, giving him 1/5 participation marks.
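The three examples above can be reproduced with a short sketch. The deduction of 1 mark per week short of 10 is inferred from Benjamin's and Chun Ming's cases, the "at least half" threshold follows Alicia's example, and the floor at 0 is an assumption. Criterion C (tutorial attendance) is not modeled because its effect is not quantified here. All function and parameter names are hypothetical.

```python
def weeks_meeting_bar(weekly_scores):
    """Count weeks in which at least half of the available points were earned.

    weekly_scores: list of (earned, available) pairs, one per week.
    """
    return sum(1 for earned, available in weekly_scores if 2 * earned >= available)


def participation_marks(weeks_met, low_conduct_criteria=0, bonus=0):
    """Participation marks out of 5 (illustrative only)."""
    shortfall = max(0, 10 - weeks_met)            # inferred: 1 mark per week short of 10
    marks = 5 - shortfall - low_conduct_criteria  # -1 per below-average conduct criterion
    marks = max(0, marks)                         # assumption: marks do not go below 0
    return min(5, marks + bonus)                  # bonus only tops up to the 5-mark cap


alicia = [(1, 2), (3, 5), (2, 5)] + [(5, 5)] * 7 + [(4, 5), (5, 5)]
print(participation_marks(weeks_meeting_bar(alicia)))   # Alicia: 5
print(participation_marks(9, bonus=1))                  # Benjamin: 5
print(participation_marks(8, low_conduct_criteria=2))   # Chun Ming: 1
```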

Where to find your participation marks progress

Your participation progress can be tracked on this page from week 3 onward.

Admin Individual Project (iP) Grading

Total: 20 marks

Implementation [10 marks]: Requirements to get full marks:

- Achieve more than 90% of all deliverables by the end.

- Requirements marked as optional or if-applicable are not counted when calculating the percentage of deliverables.

- When a requirement specifies a minimal version of it, simply reaching that minimal version of the requirement is enough for it to be counted for grading -- however, we recommend that you go beyond the minimal; the farther you go, the more practice you will get.

- The code quality meets the following conditions:

- Reasonable use of OOP e.g., at least some use of inheritance, code divided into classes in a sensible way

- No blatant violations of the coding standard

- At least some errors are handled using exceptions

- At least half of public methods/classes have javadoc comments

- The code is neat e.g., no chunks of commented out code

- Reasonable use of SLAP e.g., no very-long methods or deeply nested code

- Has some JUnit tests
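The "more than 90% of all deliverables" requirement above, with optional and if-applicable items excluded from the count, could be checked along these lines. This is a sketch under stated assumptions: the function name, the `(done, optional)` representation, and the handling of an empty list are hypothetical and do not reflect how the progress scripts actually work.

```python
def implementation_bar_met(deliverables):
    """Check the 'more than 90% of deliverables' bar (hypothetical helper).

    deliverables: list of (done, optional) boolean pairs, where 'optional'
    also covers requirements marked as if-applicable.
    """
    counted = [done for done, optional in deliverables if not optional]
    if not counted:
        return False  # assumption: with nothing to count, the bar is not met
    # Integer comparison of done/total > 90%, avoiding floating-point rounding
    return 10 * sum(counted) > 9 * len(counted)
```

Note that exactly 90% (e.g., 9 of 10 required deliverables) does not satisfy "more than 90%" in this sketch.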

Project Management [5 marks]: To get full marks, you should:

- Submit some deliverables in at least 4 out of the 5 iP weeks (i.e., week 2 - week 6)

- Follow other requirements specified (e.g., how to use Git/GitHub for each increment, do peer reviews) in at least 4 weeks

Documentation [5 marks]: To get full marks, you should ensure the following:

- The product web site and the user guide are reasonable (i.e., functional, not hard to read, covers all features, no major formatting errors in the published view).

You can monitor your iP progress (as detected by our scripts) in the iP Progress Dashboard page.

Admin Team Project (tP) Grading

Note that project grading is not competitive (not bell curved). CS2103T projects will be assessed separately from CS2103 projects. Given below is the marking scheme.

Total: 45 marks (35 individual marks + 10 team marks)

See the sections below for details of how we assess each aspect.

1. Project Grading: Product Design [ 5 marks]

Evaluates: how well your features fit together to form a cohesive product (not how many features or how big the features are) and how well the product matches the target user

Evaluated by:

- tutors (based on product demo and user guide)

- peers from other teams (based on peer testing and user guide)

Admin tP → PE → Grading Instructions for Product Design

Evaluate based on the User Guide and the actual product behavior.

| Criterion | Unable to judge | Low | Medium | High |
|---|---|---|---|---|
| target user | Not specified | Clearly specified and narrowed down appropriately | | |
| value proposition | Not specified | The value to target user is low. App is not worth using | Some small group of target users might find the app worth using | Most of the target users are likely to find the app worth using |
| optimized for target user | | Not enough focus for CLI users | Mostly CLI-based, but cumbersome to use most of the time | Feels like a fast typist can be more productive with the app, compared to an equivalent GUI app without a CLI |
| feature-fit | | Many of the features don't fit with others | Most features fit together but a few may be possible misfits | All features fit together to form a cohesive whole |

In addition, feature flaws reported in the PE will be considered when grading this aspect.

These are considered feature flaws:

- The feature does not solve the stated problem of the intended user i.e., the feature is 'incomplete'

- Hard-to-test features

- Features that don't fit well with the product

- Features that are not optimized enough for fast-typists or target users

2. Project Grading: Implementation [ 10 marks]

2A. Code quality

Evaluates: the quality of the parts of the code you claim as written by you

Evaluation method: manual inspection by tutors + automated-analysis by a script

Criteria:

- At least some evidence of these (see here for more info)

- logging

- exceptions

- assertions

- defensive coding

- No coding standard violations e.g. all boolean variables/methods sound like booleans. Checkstyle can prevent only some coding standard violations; others need to be checked manually.

- SLAP is applied at a reasonable level. Long methods or deeply-nested code are symptoms of low-SLAP.

- No noticeable code duplications i.e. if there are multiple blocks of code that vary only in minor ways, try to extract out similarities into one place, especially in test code.

- Evidence of applying code quality guidelines covered in the module.

2B. Effort

Evaluates: how much value you contributed to the product

Method:

- Step 1: Evaluate the effort for the entire project. This is evaluated by peers who tested your product, and tutors.

Admin tP → PE → Questions used for Implementation Effort

Rating scale: [0..20] e.g., if you give 8, that means the team's effort is about 80% of that spent on creating AB3. We expect most typical teams to score near 10.

- Do read the DG appendix named Effort, if any.

- Consider implementation work only (i.e., exclude testing, documentation, project management etc.)

- Do not give a high value just to be nice. Your responses will be used to evaluate your effort estimation skills.

- Step 2: Evaluate how much of that effort can be attributed to you. This is evaluated by team members, and tutors.

Admin Peer Evaluations → Questions used for Evaluating Implementation Effort

Uses the Equal Share +/- N% scale for the answer

- Baseline: If your team received a value higher than 10 in step 1 and the team agrees that you did roughly an equal share of implementation work, you should receive full marks for effort.
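The effort scale and the baseline rule can be read as the following sketch. It assumes, as the example in step 1 implies, that a rating of 10 corresponds to the effort spent on creating AB3. The function names are hypothetical, and the real evaluation also involves tutor judgment that is not captured here.

```python
def effort_relative_to_ab3(rating):
    """Convert a [0..20] effort rating into a percentage of AB3's effort."""
    if not 0 <= rating <= 20:
        raise ValueError("rating must be in the range [0..20]")
    return rating * 10  # e.g., a rating of 8 means about 80% of AB3's effort


def meets_effort_baseline(team_rating, did_equal_share):
    """Baseline for full effort marks: team rated above 10 and an equal share done."""
    return team_rating > 10 and did_equal_share
```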

3. Project Grading: QA [ 10 marks]

3A. Developer Testing:

Evaluates: How well you tested your own feature

Based on:

- functionality bugs in your work found by others during the Practical Exam (PE)

- your test code (note our expectations for automated testing)

- Expectation: Write some automated tests so that we can evaluate your ability to write tests.

🤔 How much testing is enough? We expect you to decide. You learned different types of testing and what they try to achieve. Based on that, you should decide how much of each type is required. Similarly, you can decide to what extent you want to automate tests, depending on the benefits and the effort required.

There is no requirement for a minimum coverage level. Note that in a production environment you are often required to have at least 90% of the code covered by tests. In this project, it can be less. The weaker your tests are, the higher the risk of bugs, which will cost marks if not fixed before the final submission.

These are considered functionality bugs:

- Behavior differs from the User Guide

- A legitimate user behavior is not handled e.g. incorrect commands, extra parameters

- Behavior is not specified and differs from normal expectations e.g. error message does not match the error

3B. System/Acceptance Testing:

Evaluates: How well you can system-test/acceptance-test a product

Based on: bugs you found in the PE. In addition to functionality bugs, you get credit for reporting documentation bugs and feature flaws.

Grading bugs found in the PE

- Of the Developer Testing component (3A above, based on the bugs found in your code) and the System/Acceptance Testing component (3B above, based on the bugs found in others' code), the one you do better in will be given a 70% weight and the other a 30% weight, so that your total score is driven by your strengths rather than weaknesses.

- Bugs rejected by the dev team, if the rejection is approved by the teaching team, will not affect marks of the tester or the developer.

- The penalty/credit for a bug varies based on the severity of the bug: severity.High > severity.Medium > severity.Low > severity.VeryLow

- The three types (i.e., type.FunctionalityBug, type.DocumentationBug, type.FeatureFlaw) are counted for three different grade components. The penalty/credit can vary based on the bug type. Given that you are not told which type has a bigger impact on the grade, always choose the most suitable type for a bug rather than try to choose a type that benefits your grade.

- The penalty for a bug is divided equally among assignees.

- Developers are not penalized for duplicate bug reports they received but the testers earn credit for duplicate bug reports they submitted as long as the duplicates are not submitted by the same tester.

- Obvious bugs (i.e., the same bug reported by many testers) earn less credit for the tester and a slightly higher penalty for the developer.

- If the team you tested has a low bug count i.e., total bugs found by all testers is low, we will fall back on other means (e.g., performance in PE dry run) to calculate your marks for system/acceptance testing.

- Your marks for developer testing depend on the bug density rather than total bug count. Here's an example:

- n bugs found in your feature; it is a big feature consisting of a lot of code → 4/5 marks

- n bugs found in your feature; it is a small feature with a small amount of code → 1/5 marks

- You don't need to find all bugs in the product to get full marks. For example, finding half of the bugs of that product or 4 bugs, whichever is lower, could earn you full marks.

- Excessive incorrect downgrading/rejecting/duplicate-flagging (i.e., marking as duplicates), if deemed an attempt to game the system, will be penalized.
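The 70%/30% weighting of the two testing components, and the equal split of a bug's penalty among assignees, can be illustrated as follows. This sketch assumes both component scores are expressed on the same scale. The function names and the numeric values in the usage example are hypothetical, since the actual penalty/credit amounts are not published.

```python
def qa_score(developer_testing, system_testing):
    """Combine the two testing scores, weighting the better one at 70%."""
    better = max(developer_testing, system_testing)
    worse = min(developer_testing, system_testing)
    return 0.7 * better + 0.3 * worse


def penalty_per_assignee(penalty, assignee_count):
    """Split one bug's penalty equally among the developers assigned to it."""
    if assignee_count < 1:
        raise ValueError("a bug needs at least one assignee")
    return penalty / assignee_count


# The order of the two scores does not matter; the stronger one always gets 70%.
print(qa_score(4, 2) == qa_score(2, 4))  # True
```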

4. Project Grading: Documentation [ 10 marks]

Evaluates: your contribution to project documents

Method: Evaluated in two steps.

- Step 1: Evaluate the whole UG and DG. This is evaluated by peers who tested your product, and tutors.

Admin tP → PE → Grading Instructions for User Guide

Evaluate based on fit-for-purpose, from the perspective of a target user.

For reference, the AB3 UG is here.

Admin tP → PE → Grading Instructions for Developer Guide

Evaluate based on fit-for-purpose from the perspective of a new team member trying to understand the product's internal design by reading the DG.

For reference, the AB3 DG is here.

- Step 2: Evaluate how much of that effort can be attributed to you. This is evaluated by team members, and tutors.

Admin Peer Evaluations → Questions used for Evaluating the Contribution to the UG

Admin Peer Evaluations → Questions used for Evaluating the Contribution to the DG

Uses the Equal Share +/- N% scale for the answer

- In addition, UG and DG bugs you received in the PE will be considered for grading this component.

These are considered UG bugs (if they hinder the reader):

Use of visuals:

- Not enough visuals e.g., screenshots/diagrams

- The visuals are not well integrated with the explanation

- The visuals are unnecessarily repetitive e.g., same visual repeated with minor changes

Use of examples:

- Not enough or too many examples e.g., sample inputs/outputs

Explanations:

- The explanation is too brief or unnecessarily long.

- The information is hard to understand for the target audience. e.g., using terms the reader might not know

Neatness/correctness:

- looks messy

- not well-formatted

- broken links, other inaccuracies, typos, etc.

- hard to read/understand

- unnecessary repetitions (i.e., hard to see what's similar and what's different)

These are considered DG bugs (if they hinder the reader):

Those given as possible UG bugs ...

Architecture:

- Symbols used are not intuitive

- Indiscriminate use of double-headed arrows

- Architecture-level diagrams (e.g., the sequence diagram showing interactions between main components) contain lower-level details

- Descriptions given are not sufficiently high-level

UML diagrams:

- Notation incorrect or not compliant with the notation covered in the module.

- Some other type of diagram used when a UML diagram would have worked just as well.

- The diagram used is not suitable for the purpose it is used.

- The diagram is too complicated.

Code snippets:

- Excessive use of code e.g., a large chunk of code is cited when a smaller extract would have sufficed.

Problems in User Stories. Examples:

- Incorrect format

- Not all three parts are present

- The three parts do not match with each other

- Important user stories missing

Problems in Use Cases. Examples:

- Formatting/notational errors

- Incorrect step numbering

- Unnecessary UI details mentioned

- Missing/unnecessary steps

- Missing extensions

Problems in NFRs. Examples:

- Not really a Non-Functional Requirement

- Not scoped clearly (i.e., hard to decide when it has been met)

- Not reasonably achievable

- Highly relevant NFRs missing

Problems in Glossary. Examples:

- Unnecessary terms included

- Important terms missing

5. Project Grading: Project Management [ 5 + 5 = 10 marks]

5A. Process:

Evaluates: How well you did in project management related aspects of the project, as an individual and as a team

Based on: tutor/bot observations of project milestones and GitHub data

Grading criteria:

- Project done iteratively and incrementally (opposite: doing most of the work in one big burst)

- Milestones reached on time (i.e., by the midnight before the tutorial). To get a good grade for this aspect, achieve at least 60% of the recommended milestone progress.

- Good use of GitHub milestones

- Good use of GitHub release mechanism

- Good version control, based on the repo

- Reasonable attempt to use the forking workflow

- Good task definition, assignment and tracking, based on the issue tracker

- Good use of buffers (opposite: everything at the last minute)

5B. Team-tasks:

Evaluates: How much you contributed to team-tasks

Admin tP → Expectations: Examples of team-tasks

Here is a non-exhaustive list of team-tasks:

- Setting up the GitHub team org/repo

- Necessary general code enhancements e.g.,

- Work related to renaming the product

- Work related to changing the product icon

- Morphing the product into a different product

- Setting up tools e.g., GitHub, Gradle

- Maintaining the issue tracker

- Release management

- Updating user/developer docs that are not specific to a feature e.g. documenting the target user profile

- Incorporating more useful tools/libraries/frameworks into the product or the project workflow (e.g. automate more aspects of the project workflow using a GitHub plugin)

Based on: peer evaluations, tutor observations

Grading criteria: Do these to earn full marks.

- Do close to an equal share of the team tasks (you can earn bonus marks by doing more than an equal share).

- Merge code in at least four of weeks 7, 8, 9, 10, 11, 12

Admin Exams

There is no midterm exam. Information about the final exam is given below.

- The final exam will be as per the normal exam schedule, and will count for 30% of the final grade.

- The exam will be done online.

- We will be following the SoC's E-Exam SOP, combined with the deviations/refinements given in the section below. Please read the SOP carefully and ensure you follow all instructions.

SOP deviations/refinements

-

Tools: LumiNUS, Zoom, Microsoft Teams (MST), PDF scanner, PDF reader.

-

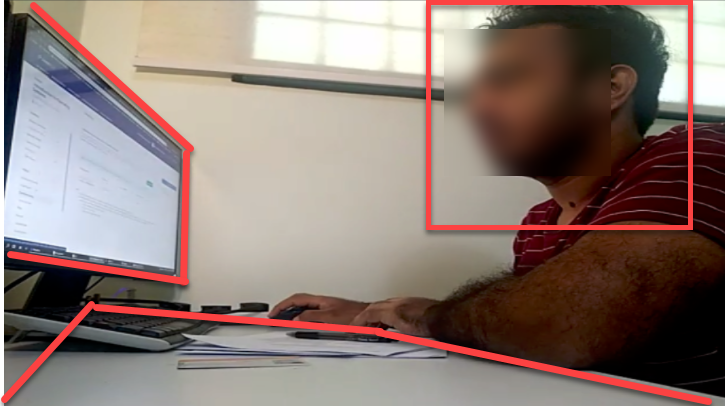

The webcam view should capture all three of these: your upper body (side view), the entire screen area of your monitor, the working area of the table. Here is an example:

-

Recording of your PC screen is not required.

-

Only one computer screen is allowed.

-

Not allowed to use the printer or other devices during the exam.

-

Soft copies of notes: only PDF format is allowed. Other formats (e.g., MS Word, .txt, html) are not allowed. No limitation on what the PDF file contains or the number of PDF files to be used.

You may use any hard copies or written materials too. -

The Browser should only be used to access LumiNUS. Accessing other websites (including the module website) is not allowed.

It turns out that the textbook PDF file plays better with browsers than PDF viewers. Therefore, viewing the textbook PDF in the browser is allowed. But other PDFs should be opened in a PDF viewer.

The reason for restricting the use of the browser to view PDF files is that allowing it makes it harder for invigilators to detect students accessing unauthorized websites. -

Use Microsoft Teams or Zoom private messages to communicate with the invigilator.

-

The quiz will not appear on LumiNUS until a few minutes before we release the password. Wait until we announce that the quiz is available to see.

-

When the invigilator asks you to do an identity check, turn your face towards the camera, move closer to the camera, remove face mask (if any), and hold the pose until the invigilator tells you to go back to your working position.

-

If you have a doubt/query about a question, or want to make an assumption about a question, please write it down in the 'justification' text box. Do not try to communicate those with the invigilator during the exam. We'll take your doubt/query/assumption into account when grading. For example, if many had queries about a specific question, we can conclude that the question is unclear and omit it from grading.

-

If you encounter a serious problem that prevents you from proceeding with the exam (e.g., the password to open the quiz doesn't work), PM the invigilator using MS Teams (failing that, use Zoom chat).

-

If your computer crashed/restarted during the exam, try to get it up again and resume the exam. LumiNUS will allow you to resume from where you stopped earlier. However, note that there is a deadline to finish the quiz and you will overrun that deadline if you lose more than 5 minutes due to the computer outage.

-

The zoom link and the invigilator info will be distributed via LumiNUS gradebook at least 24 hours before the exam.

Format

- The exam will be divided into 3 parts.

Final exam - part 1

- A LumiNUS quiz containing 16 MCQs. All questions are estimated to be of equal size/difficulty.

- You only need to answer 15 questions correctly to get full marks. The extra question is there to cushion you against careless mistakes or misinterpreting a question.

- Questions will appear in random order.

- You will not be able to go back to previous questions.

Reasons:

1. to minimize opportunities for collusion

2. not unreasonable for the materials tested and the proficiency level expected -- i.e., when using this knowledge in a real life SE project discussion, it will be rare for you to go back to revise what you said earlier in the discussion

- Duration: 35 minutes (recommended: allocate 2 minutes per question, which gives you a 3-minute buffer)

- You are required to give a justification for your answer. The question will specify what should be included in the justification. Answers without the correct justification may not earn full marks. However, we'll give full marks for up to two correct answers (per 16 questions) that do not have justifications (to cater for cases where you accidentally proceeded to the next question before adding the justification).

- Here is an example question. The answer is 'a' and the justification can be 'OOP is only one of the choices for an SE project'.

Choose the incorrect statement.

[Justification: why is it incorrect?]

- Almost all questions will ask you to choose the INCORRECT statement and justify why it is incorrect.

- There will be a 5-minute toilet break after this part.
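The recommended time budget for part 1 is simple arithmetic, sketched below for anyone planning their pace. The constants come from the description above; the function name and its parameters are hypothetical.

```python
QUESTIONS = 16            # MCQs in part 1
DURATION_MIN = 35         # total minutes allowed for part 1
MINUTES_PER_QUESTION = 2  # recommended pace


def buffer_minutes(questions=QUESTIONS, duration=DURATION_MIN,
                   per_question=MINUTES_PER_QUESTION):
    """Minutes left over after spending the recommended time on every question."""
    return duration - questions * per_question


print(buffer_minutes())  # 3
```

Because you cannot go back to previous questions, the buffer can only be spent on the question currently in front of you.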

Final exam - part 2

- You will be asked to draw some UML diagrams, to be hand-drawn on paper (not on a tablet). You may use pencils if you wish.

- Duration: 20 minutes

- The questions will be in an encrypted PDF file that will be given to you in advance. The password will only be given at the start of this section.

- At the end of the exam (i.e., after all three parts are over), you will upload a scanned copy to LumiNUS. Do not do any scanning/uploading at this time.

- These diagrams will not be graded directly. Instead, you will use them when answering part 3 of the exam.

However, we may use the diagrams to give some consolation marks should you score very low in the corresponding MCQ questions.

Final exam - part 3

- Similar to part 1 (e.g., 16 questions, same length).

- Some questions will refer to the UML diagrams that you drew in part 2.

- You may modify your UML diagrams during this time. Reminder: diagrams are not graded.

- You may refer to the PDF file used in part 2 during this part too.

- Show the diagram to the camera at the end of this part, when the examiner asks you to.

- Due to the above point, you will have to stay back in Zoom until the full exam is over (not allowed to leave early).

- Due to the above point, you may want to have something to read, in case you finish early. You are not allowed to use other gadgets or use the computer to do other things even if you have finished the exam.

- After the exam, scan and upload the diagrams you drew in part 2 onto LumiNUS, as a single PDF file, within an hour. The file name does not matter.

Exam briefing, mock exam, practice exam paper

- There will be an exam briefing in the penultimate lecture. It will include a minimal mock exam, just to help you understand the structure.

- You will be given a practice exam paper (half the size of the full paper) to help you practice timing. That practice paper will be released at least one week before the exam.